Enterprises are accelerating AI initiatives across every sector, but expectations are advancing faster than operational readiness. Many leaders assume AI systems will review data, update critical systems, manage workflows, and reduce risk. In practice, AI can recommend actions, but it cannot assume responsibility for outcomes.

Every production environment still requires a human owner for decisions that affect customers, finances, safety, compliance, or operations. This issue examines why accountability remains central to AI adoption and why organizations that overlook it encounter instability.

1. The Expectation Gap

AI is being asked to:

- Analyze large volumes of data
- Recommend or execute system updates
- Automate multi-step workflows
- Detect anomalies and risks
- Operate continuously at scale

These expectations reflect real progress in AI capability. They also obscure a critical limitation. AI systems do not understand organizational context, regulatory nuance, or downstream consequences. They generate outputs based on probability, not accountability.

When AI contributes to a material error in patient records, financial data, supply chain configuration, or contractual terms, the responsibility remains with the organization. Someone must own the decision.

2. The Missing Element in Many AI Programs

Many AI initiatives launch without clearly defining who reviews outputs, approves changes, or intervenes when results are incorrect. This creates gaps in:

- Governance
- Quality assurance
- Compliance
- Auditability
- Operational safety

Speed without ownership introduces risk. AI can propose changes, but without an accountable reviewer, those changes can propagate errors across systems.

AI expands operational capability. It does not replace responsibility.

3. Mount Everest Claims vs Actual Capability

Across agencies, consultancies, and vendors, AI achievements are often described as transformational breakthroughs. Some claim full automation, end-to-end autonomy, or enterprise-wide AI transformation.

In many cases, the underlying delivery is limited:

- Interfaces wrapped around ChatGPT or similar models
- Prompt-based output without system integration
- Minimal interaction with enterprise data pipelines
- No governance, escalation, or rollback mechanisms
- Limited applicability in regulated environments

The gap between claims and real operational capability creates confusion for enterprise buyers and increases implementation risk.

4. Signals From Microsoft: Progress Requires Discipline

Microsoft’s decision to moderate the pace of certain AI rollouts reflects enterprise reality. The challenge is not innovation speed. It is operating AI inside environments that require stability, predictability, and compliance.

Enterprise systems demand:

- Deterministic behavior
- Clear audit trails
- Security controls
- Regulatory alignment
- Defined ownership

Microsoft's measured pace reflects a broader industry shift. AI capabilities must mature within governance frameworks that match the environments they serve.

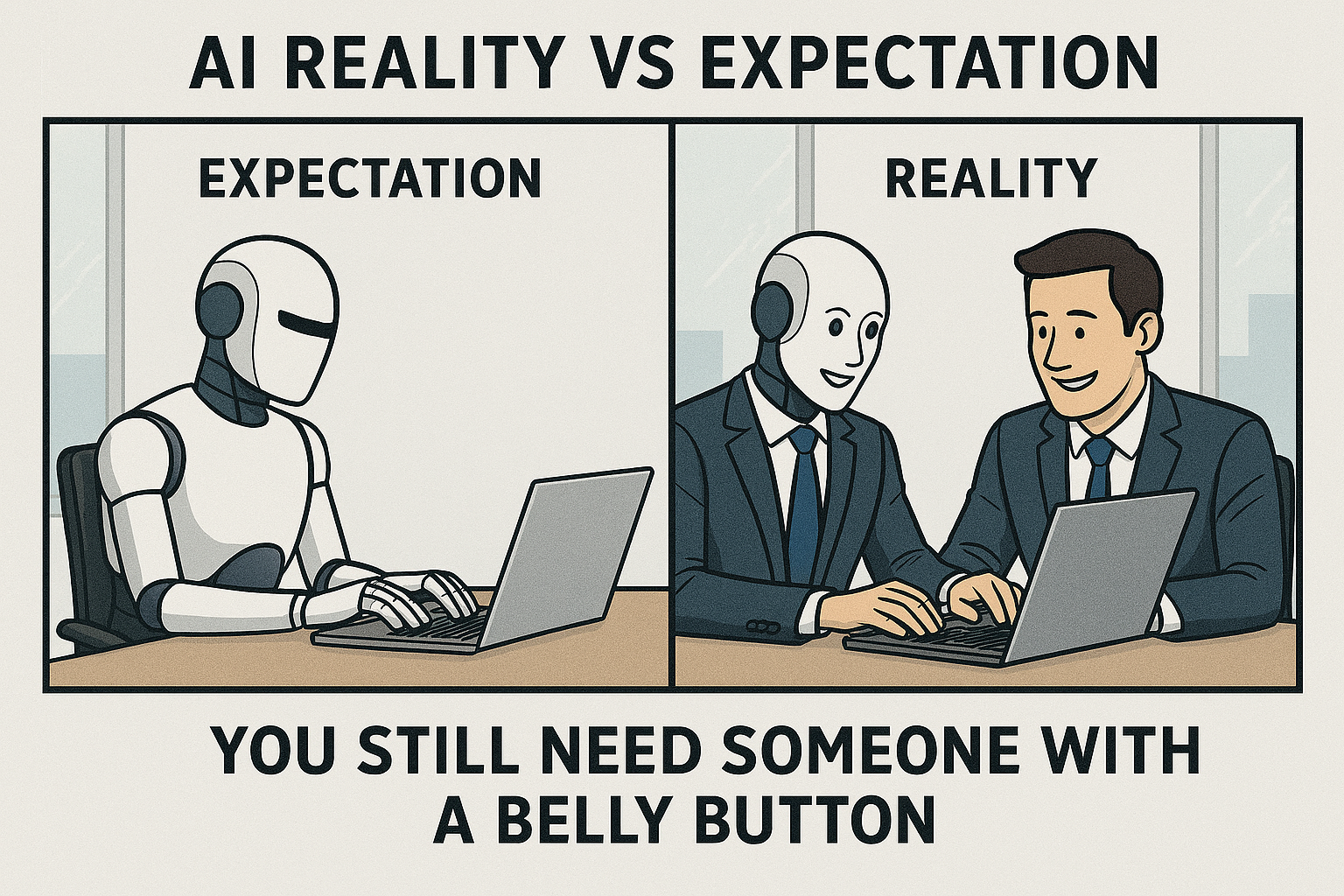

5. The Belly Button Rule

Every AI-enabled workflow requires a clearly identified owner. This person is responsible for:

- Reviewing AI-generated recommendations
- Approving changes before systems are updated
- Monitoring ongoing accuracy
- Responding to exceptions and failures
- Documenting decisions for audit and compliance

AI can assist, accelerate, and recommend.

A human must remain accountable.

Organizations that fail to assign ownership encounter stalled projects, unclear escalation paths, and heightened operational risk.
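For teams that want to see what this rule can look like in software, the following is a minimal sketch of an approval gate, not a reference implementation. It assumes a single named owner per workflow. All names in it (`Recommendation`, `OwnedWorkflow`, `review`, `apply`, and the sample owner and change) are hypothetical and chosen only for illustration. The idea is that an AI-proposed change cannot reach a system until the owner has approved it, and every decision, including failures, lands in an audit log.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class Recommendation:
    """A change proposed by an AI system (hypothetical structure)."""
    description: str
    status: str = "pending"  # pending -> approved | rejected -> applied | failed


@dataclass
class OwnedWorkflow:
    """An AI-enabled workflow with exactly one accountable human owner."""
    name: str
    owner: str
    audit_log: list = field(default_factory=list)

    def _record(self, actor: str, action: str, rec: Recommendation, note: str = "") -> None:
        # Document every decision so it can be audited later.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "workflow": self.name,
            "actor": actor,
            "action": action,
            "change": rec.description,
            "note": note,
        })

    def review(self, rec: Recommendation, reviewer: str, approve: bool, note: str = "") -> None:
        # Only the named owner may approve or reject a recommendation.
        if reviewer != self.owner:
            raise PermissionError(f"{reviewer} is not the owner of {self.name}")
        rec.status = "approved" if approve else "rejected"
        self._record(reviewer, rec.status, rec, note)

    def apply(self, rec: Recommendation, update_system: Callable[[str], None]) -> None:
        # Block any change that the owner has not explicitly approved.
        if rec.status != "approved":
            raise RuntimeError("change has not been approved by the owner")
        try:
            update_system(rec.description)
        except Exception as exc:
            # Failures are logged against the owner, then surfaced for follow-up.
            rec.status = "failed"
            self._record(self.owner, "failed", rec, note=str(exc))
            raise
        rec.status = "applied"
        self._record(self.owner, "applied", rec)


# Example usage with made-up values.
workflow = OwnedWorkflow(name="invoice-coding", owner="j.rivera")
proposal = Recommendation("Reclassify vendor 1042 to cost center 7")
applied_changes: list = []

workflow.review(proposal, reviewer="j.rivera", approve=True, note="Checked against contract")
workflow.apply(proposal, applied_changes.append)
```

The design choice worth noting is that `apply` refuses to run on anything other than an approved recommendation, so speed never bypasses ownership. A real deployment would also need persistent audit storage, authentication of the reviewer, and rollback, none of which this sketch attempts.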

6. Where Execution-Focused Partners Fit

Deploying AI responsibly requires more than model selection. It involves engineering, workflow design, data readiness, integration, and governance.

Execution-focused partners support this by:

- Mapping workflows into structured AI tasks
- Preparing data environments for reliability and scale
- Integrating AI with ERP, clinical, financial, and operational systems
- Implementing guardrails, review layers, and monitoring
- Supporting long-term operations
- Advising leaders on realistic capabilities and limitations

These capabilities help enterprises adopt AI without sacrificing control or stability.

7. A Desk-Side Reference for Leaders

Executives can use the following as a simple, durable cheat sheet:

- AI and ML: systems that decide
- Neural Networks: systems that perceive
- GenAI: systems that communicate
- Agentic AI: systems that act

This model clarifies conversations with teams, boards, and vendors without requiring technical depth.

Closing Insight

AI increases speed, scale, and analytical reach. It does not remove the need for accountability. Organizations that treat AI as a decision-maker introduce risk. Organizations that pair AI capability with clear ownership, review, and governance create durable operating models.

AI can scale work. Responsibility remains human.